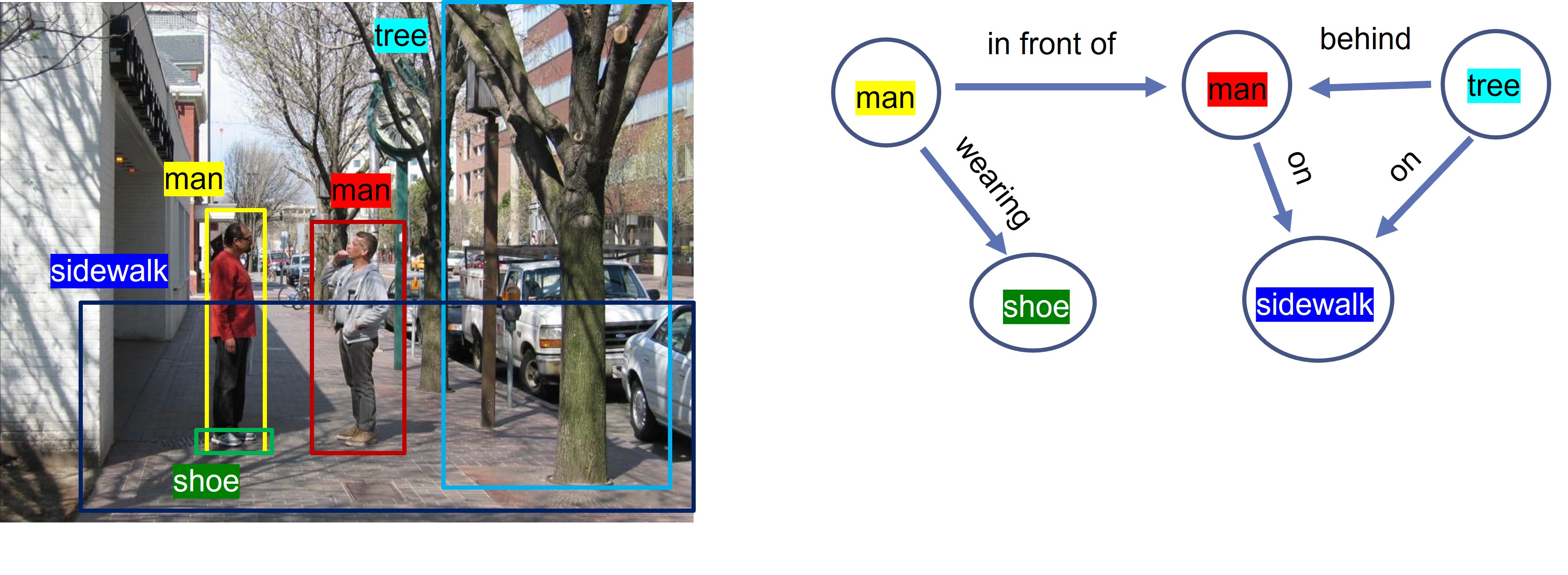

Objects in an image are not stand-alone entities; they are related to one another in different ways. As shown in Fig. 1.1, the image contains objects such as a man, shoes, a sidewalk, and a tree. These objects are connected through relationships such as 'man-wearing-shoe' or 'tree-on-sidewalk'. We can encode these relationships as a graph whose nodes denote the objects and whose edges denote the relationships. This concise representation is called a Scene Graph (SG), and it acts as a bridge between low-level computer vision tasks such as object detection and high-level cognition tasks such as visual question answering. Scene Graph Generation (SGG) is the task of generating such a scene graph from an input image.
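To make the representation concrete, the following is a minimal sketch of a scene graph as a data structure. The class name `SceneGraph` and its methods are hypothetical and chosen only for illustration; the labels come from the example triplets above.

```python
from dataclasses import dataclass, field


@dataclass
class SceneGraph:
    """Toy scene graph: nodes are object labels, edges are labelled relations."""

    objects: list[str] = field(default_factory=list)
    # Each edge is stored as (subject index, predicate, object index).
    relations: list[tuple[int, str, int]] = field(default_factory=list)

    def add_object(self, label: str) -> int:
        """Add a node and return its index."""
        self.objects.append(label)
        return len(self.objects) - 1

    def add_relation(self, subj: int, predicate: str, obj: int) -> None:
        """Add a directed edge from subject node to object node."""
        self.relations.append((subj, predicate, obj))

    def triplets(self) -> list[tuple[str, str, str]]:
        """Return the edges as readable (subject, predicate, object) triplets."""
        return [(self.objects[s], p, self.objects[o]) for s, p, o in self.relations]


sg = SceneGraph()
man = sg.add_object("man")
shoe = sg.add_object("shoe")
tree = sg.add_object("tree")
sidewalk = sg.add_object("sidewalk")
sg.add_relation(man, "wearing", shoe)
sg.add_relation(tree, "on", sidewalk)
print(sg.triplets())
```

Storing edges by node index rather than by label keeps two instances of the same class (for example, two trees) distinct.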

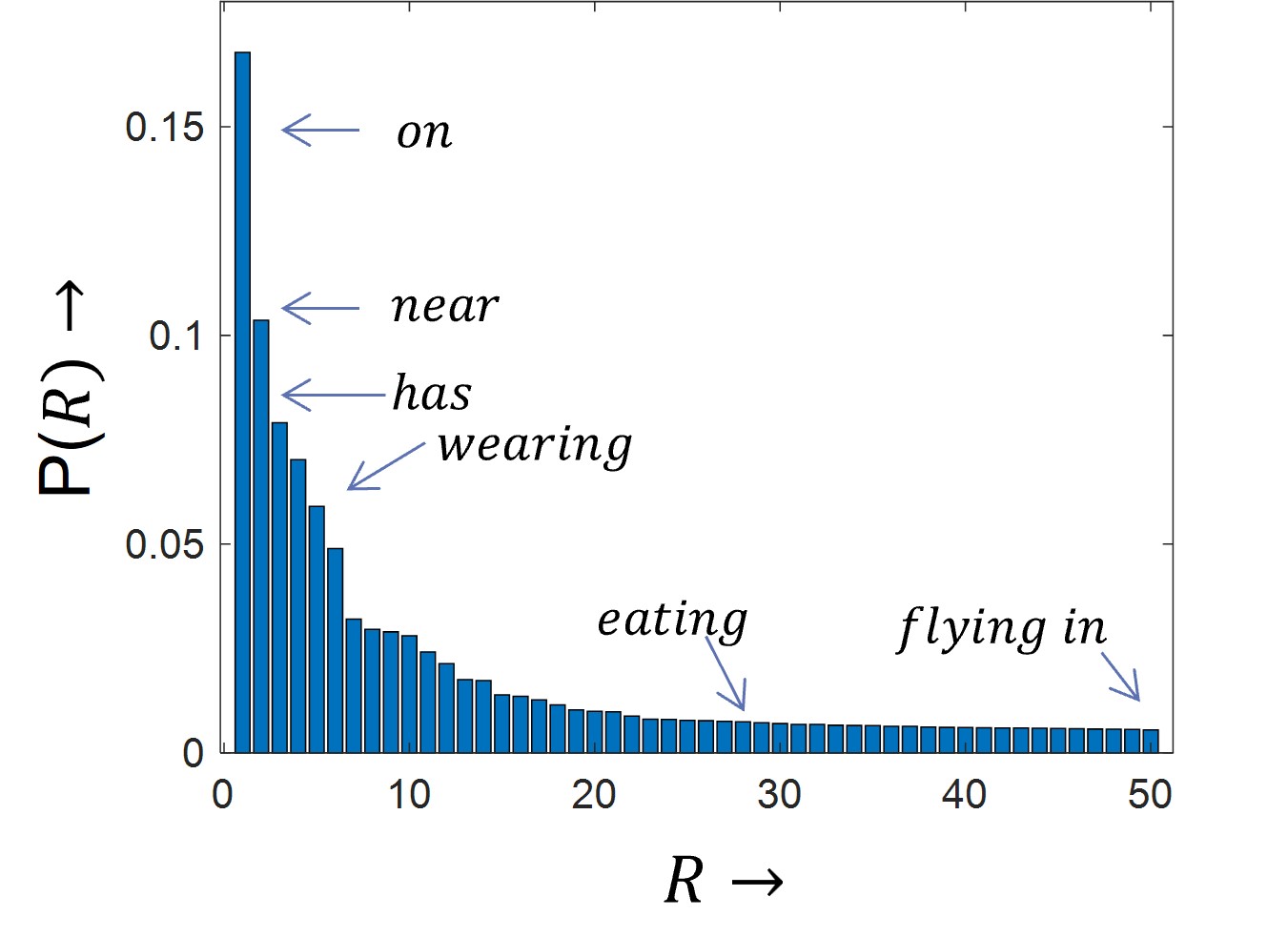

Benchmark scene graph datasets such as Visual Genome and GQA exhibit a long-tailed distribution of relationships. As a result, training scene graph models with Maximum Likelihood Estimation (MLE) biases them toward the most frequent relationships, and they perform poorly on the tail of the distribution.
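The effect of a long tail on MLE can be illustrated with a toy example. The label counts below are hypothetical and are not taken from Visual Genome or GQA; the only fact relied on is that the maximum-likelihood estimate of a categorical distribution is the relative frequency of each class.

```python
from collections import Counter

# Hypothetical predicate labels for a toy training set (not real dataset counts).
labels = ["on"] * 70 + ["wearing"] * 20 + ["holding"] * 8 + ["parked on"] * 2

counts = Counter(labels)
total = sum(counts.values())

# MLE of a categorical prior: the relative frequency of each predicate.
mle_prior = {pred: n / total for pred, n in counts.items()}

# A model that leans on this prior is pulled toward the head class.
majority = max(mle_prior, key=mle_prior.get)

# Per-class recall of a degenerate model that always predicts the head class:
# perfect on the head, zero everywhere else.
recall = {pred: 1.0 if pred == majority else 0.0 for pred in counts}

print(mle_prior)
print(majority, recall)
```

A real model is less extreme than the always-predict-the-head baseline, but the same pull toward frequent predicates is what the text describes.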

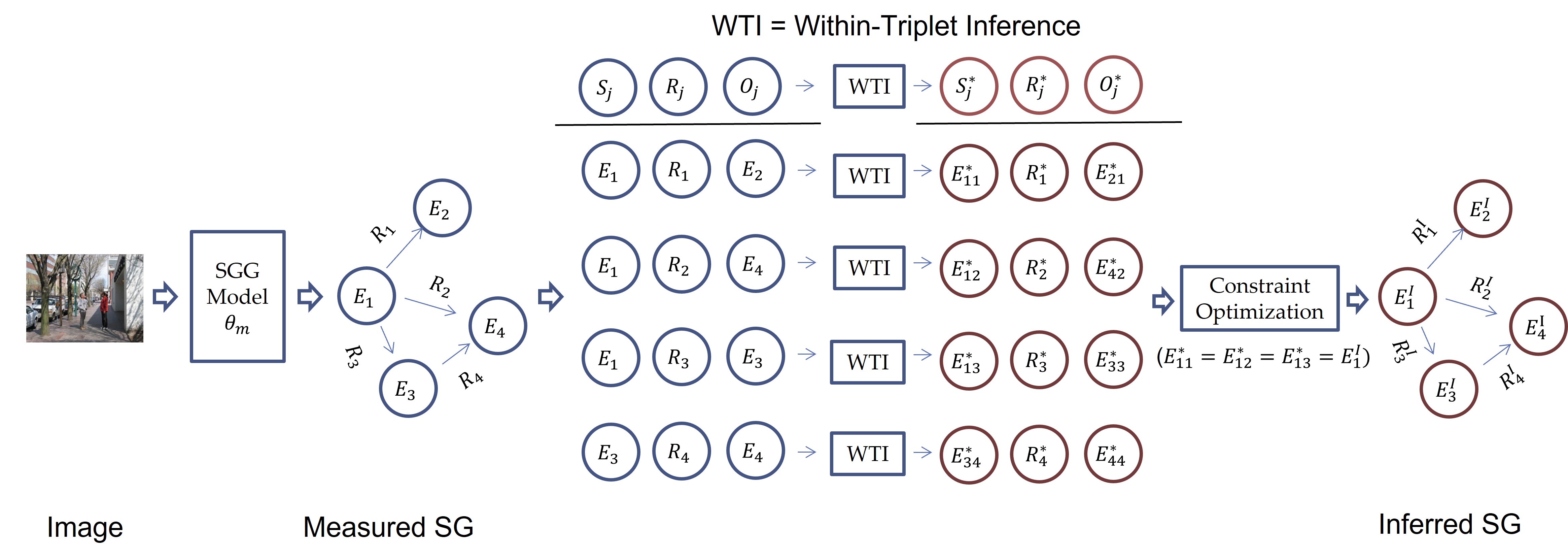

We address this long-tailedness with a post-processing method that combines the uncertain evidence for each triplet in an image's scene graph with a triplet prior to produce a debiased scene graph. Compared to previous debiasing works, our method hurts the majority classes significantly less while still improving the minority classes.

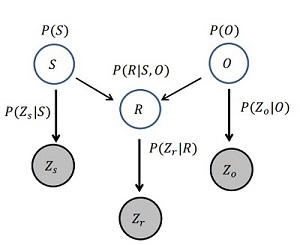

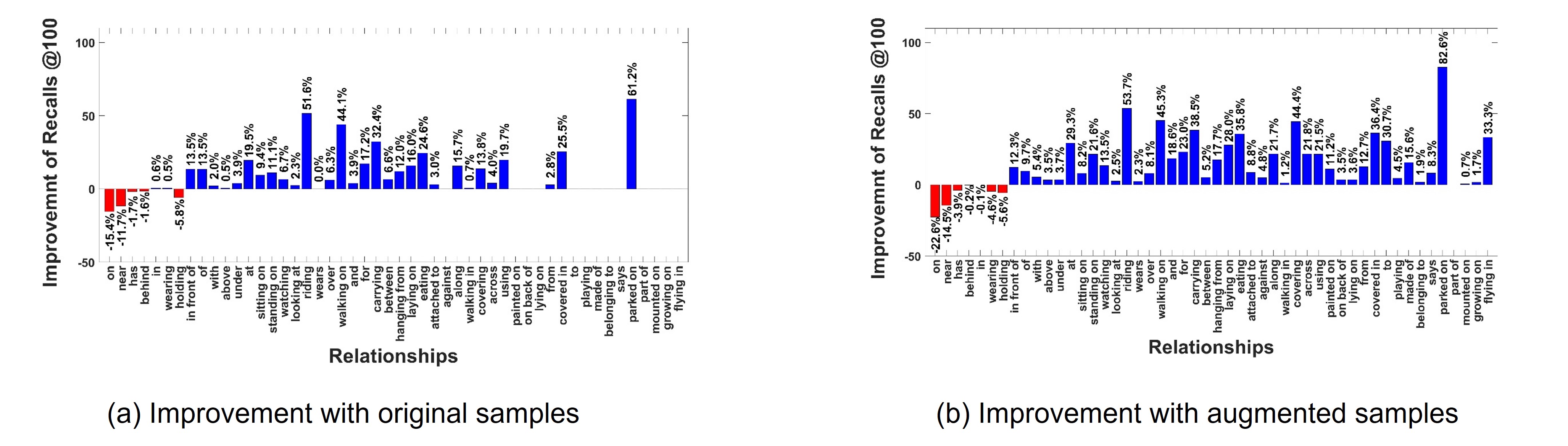

The Within-triplet Bayesian network encodes the uncertain measurements of a triplet as virtual evidence in a V-structured Bayesian network. We evaluate inference with this Bayesian network when it is learned from the original training samples and when it is learned from embedding-based augmented samples.
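The idea of inference with virtual evidence in a V-structured network can be sketched as follows. This is not the authors' implementation: the structure subject → relationship ← object, the function name `infer_triplet`, the form of the virtual evidence (one likelihood value per label), and all probability values are assumptions made for illustration.

```python
from itertools import product


def infer_triplet(p_s, p_o, p_r_given_so, lam_s, lam_r, lam_o):
    """Posterior over (subject, relationship, object) triplets.

    p_s, p_o:      prior tables over subject and object labels
    p_r_given_so:  conditional table P(r | s, o), keyed by (s, o), then by r
    lam_*:         virtual-evidence likelihoods for each node (assumed form)
    """
    joint = {}
    for s, o in product(p_s, p_o):
        for r, p_r in p_r_given_so[(s, o)].items():
            # Prior from the network times the virtual evidence on each node.
            joint[(s, r, o)] = p_s[s] * p_o[o] * p_r * lam_s[s] * lam_r[r] * lam_o[o]
    z = sum(joint.values())
    return {triplet: value / z for triplet, value in joint.items()}


# Hypothetical tables for a two-label toy problem.
p_s = {"man": 0.5, "tree": 0.5}
p_o = {"shoe": 0.5, "sidewalk": 0.5}
p_r_given_so = {
    ("man", "shoe"): {"wearing": 0.9, "on": 0.1},
    ("man", "sidewalk"): {"wearing": 0.05, "on": 0.95},
    ("tree", "shoe"): {"wearing": 0.5, "on": 0.5},
    ("tree", "sidewalk"): {"wearing": 0.05, "on": 0.95},
}
# Uncertain measurements: the relationship evidence alone prefers the head class "on".
lam_s = {"man": 0.9, "tree": 0.1}
lam_o = {"shoe": 0.8, "sidewalk": 0.2}
lam_r = {"wearing": 0.4, "on": 0.6}

posterior = infer_triplet(p_s, p_o, p_r_given_so, lam_s, lam_r, lam_o)
best = max(posterior, key=posterior.get)
print(best, round(posterior[best], 3))
```

In this toy setting the relationship evidence on its own favours "on", but the conditional table for the pair (man, shoe) shifts the most probable triplet to man-wearing-shoe.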

We observe improvements on the minority classes at the cost of some degradation on the majority classes. With the original samples, the extreme 'tail' classes do not improve, since they are almost non-existent in the training samples compared to the 'mid' and 'head' classes. After augmenting with embedding-based similarity, we gain significant improvements on those classes, which demonstrates the effectiveness of the augmentation.

Bashirul Azam Biswas and Qiang Ji. Probabilistic Debiasing of Scene Graphs. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 [PDF]

Kaihua Tang, Yulei Niu, Jianqiang Huang, Jiaxin Shi, Hanwang Zhang. Unbiased Scene Graph Generation from Biased Training. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 3716-3725 [PDF]

Meng-Jiun Chiou, Henghui Ding, Hanshu Yan, Changhu Wang, Roger Zimmermann, Jiashi Feng. Recovering the Unbiased Scene Graphs from the Biased Ones. Proceedings of the 29th ACM International Conference on Multimedia (ACMMM), 2021, pp. 1581–1590 [PDF]

Lin Li, Long Chen, Yifeng Huang, Zhimeng Zhang, Songyang Zhang, and Jun Xiao. The Devil is in the Labels: Noisy Label Correction for Robust Scene Graph Generation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022 [PDF]

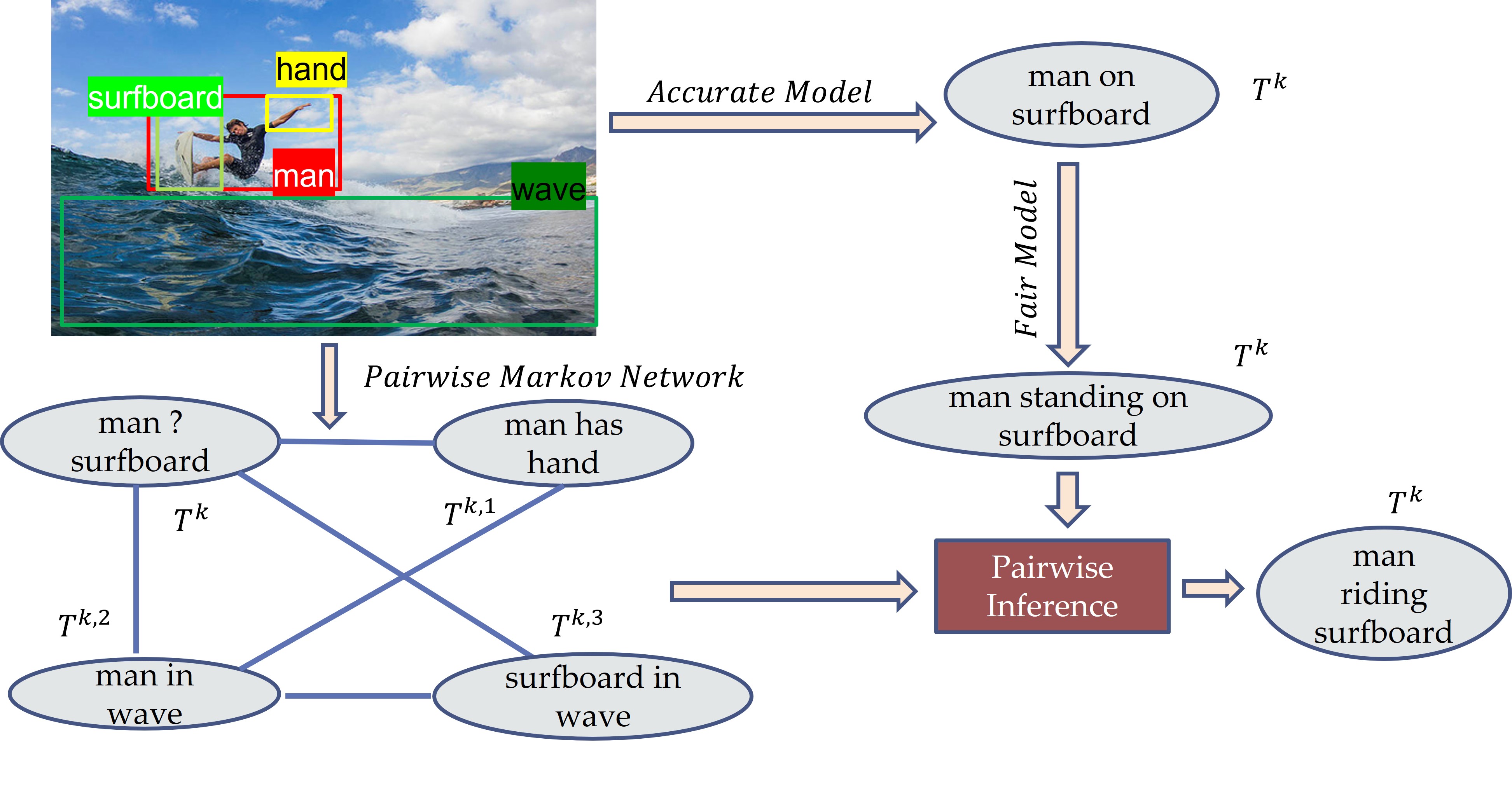

Debiasing techniques for scene graphs generate fair scene graphs by sacrificing accuracy. This project aims to improve the accuracy of a fair model by incorporating a pairwise Markov network.

This project focuses on improving the performance of dynamic scene graph generation by incorporating prior and transition probabilities in the framework of a Dynamic Bayesian Network.
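As a rough illustration of how a prior and a transition probability interact over time, the sketch below runs forward filtering on a single relationship variable across video frames. This is a first-order, HMM-like special case of a Dynamic Bayesian Network and is only an assumed simplification, not the project's model; the function name `forward_filter` and all numbers are hypothetical.

```python
def forward_filter(prior, transition, evidence_seq):
    """Filtered belief over a relationship label for each frame.

    prior:         P(r_0) for the first frame
    transition:    P(r_t | r_{t-1}), keyed by previous label, then by next label
    evidence_seq:  per-frame likelihoods of each label (e.g. detector scores)
    """
    belief = dict(prior)
    history = []
    for t, evidence in enumerate(evidence_seq):
        if t > 0:
            # Predict: propagate the previous belief through the transition model.
            belief = {
                nxt: sum(belief[prev] * transition[prev][nxt] for prev in belief)
                for nxt in belief
            }
        # Update: weight by the current frame's evidence and renormalise.
        unnorm = {r: belief[r] * evidence[r] for r in belief}
        z = sum(unnorm.values())
        belief = {r: v / z for r, v in unnorm.items()}
        history.append(belief)
    return history


prior = {"holding": 0.5, "looking at": 0.5}
# "Sticky" transitions: a relationship tends to persist between frames.
transition = {
    "holding": {"holding": 0.9, "looking at": 0.1},
    "looking at": {"holding": 0.1, "looking at": 0.9},
}
evidence_seq = [
    {"holding": 0.8, "looking at": 0.2},
    {"holding": 0.4, "looking at": 0.6},  # a noisy frame
    {"holding": 0.7, "looking at": 0.3},
]

history = forward_filter(prior, transition, evidence_seq)
for t, belief in enumerate(history):
    print(t, max(belief, key=belief.get))
```

With these toy numbers, the noisy second frame on its own would favour "looking at", while the filtered belief stays with "holding" because of the transition model.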