Efficient Effects-based Military Planning with Dynamic Influence Diagrams

Sponsored by the Army Research Office

Effects-based operations (EBO) has become an increasingly important warfighting concept for military planning, execution, and assessment. Unlike the conventional destruction-centric, attrition-based approach, EBO is outcome-centric: it focuses primarily on achieving a desired end state by applying the full spectrum of politico-military resources to conflict resolution. In this research, we propose to apply the EBO concept to military plan modeling and assessment.

Significant technical challenges, however, remain in implementing this concept systematically for military planning. First, military planning faces a large amount of uncertainty. Uncertainties exist in the sensory observations, in the actions, in their outcomes, and in the effect assessment. A probabilistic framework is therefore needed that can systematically capture these uncertainties, propagate them, and quantify their effects on a military plan. Second, the utility of a military plan usually varies with time, because the effects of its constituent actions vary with time. It is therefore crucial to model the actions, their outcomes, and their relationships explicitly and dynamically. Third, action effects must be systematically propagated through time and through the different levels of the military structure in order to evaluate their impact on the campaign objective. Finally, military planning requires identifying the optimal campaign strategy in a timely and efficient manner.

In this research, we propose methods that systematically tackle these issues. To address the first three challenges, we propose a unified probabilistic framework based on the Dynamic Influence Diagram that systematically represents the causal relationships between actions and their effects, as well as their interactions, their uncertainties, and their dynamics. The framework also provides a mechanism for systematically propagating the uncertainties and the action effects through the network over time. Building on this framework, we then introduce two complementary approaches for efficient military plan analysis. Specifically, we first propose a graph-theoretic approach that effectively reduces the search space by eliminating a large number of unlikely plans from further consideration, based on the synergies among the selected actions.
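The pruning idea can be sketched under one simple assumption of ours, not a description of the published algorithm: each candidate action is a vertex, hypothetical pairwise synergy scores label the edges, and a plan is kept only if every pair of its actions has synergy at or above a threshold, i.e. the plan forms a clique in the thresholded synergy graph. The action names, scores, and function names below are made up for illustration.

```python
from itertools import combinations

# Hypothetical candidate actions and pairwise synergy scores (made-up values).
ACTIONS = ["strike", "jam", "psyops", "recon"]
SYNERGY = {
    frozenset({"strike", "jam"}): 0.8,
    frozenset({"strike", "psyops"}): 0.2,
    frozenset({"strike", "recon"}): 0.9,
    frozenset({"jam", "psyops"}): 0.6,
    frozenset({"jam", "recon"}): 0.7,
    frozenset({"psyops", "recon"}): 0.1,
}


def synergy_graph(threshold):
    """Adjacency sets keeping only action pairs with synergy >= threshold."""
    adj = {a: set() for a in ACTIONS}
    for pair, score in SYNERGY.items():
        if score >= threshold:
            a, b = tuple(pair)
            adj[a].add(b)
            adj[b].add(a)
    return adj


def candidate_plans(threshold, max_size=3):
    """Action subsets whose actions are pairwise synergistic.

    A subset survives only if it forms a clique in the thresholded synergy
    graph; every other subset is pruned before any expensive evaluation.
    """
    adj = synergy_graph(threshold)
    kept = []
    for size in range(1, max_size + 1):
        for plan in combinations(ACTIONS, size):
            if all(b in adj[a] for a, b in combinations(plan, 2)):
                kept.append(plan)
    return kept
```

With a threshold of 0.5, 9 of the 14 subsets of up to three actions survive. The number of subsets grows exponentially with the number of actions, so in larger models a pruning step of this kind removes far more candidates before evaluation.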

We then propose a factorization procedure to significantly reduce the

evaluation time for each plan by factoring out the common computation so they

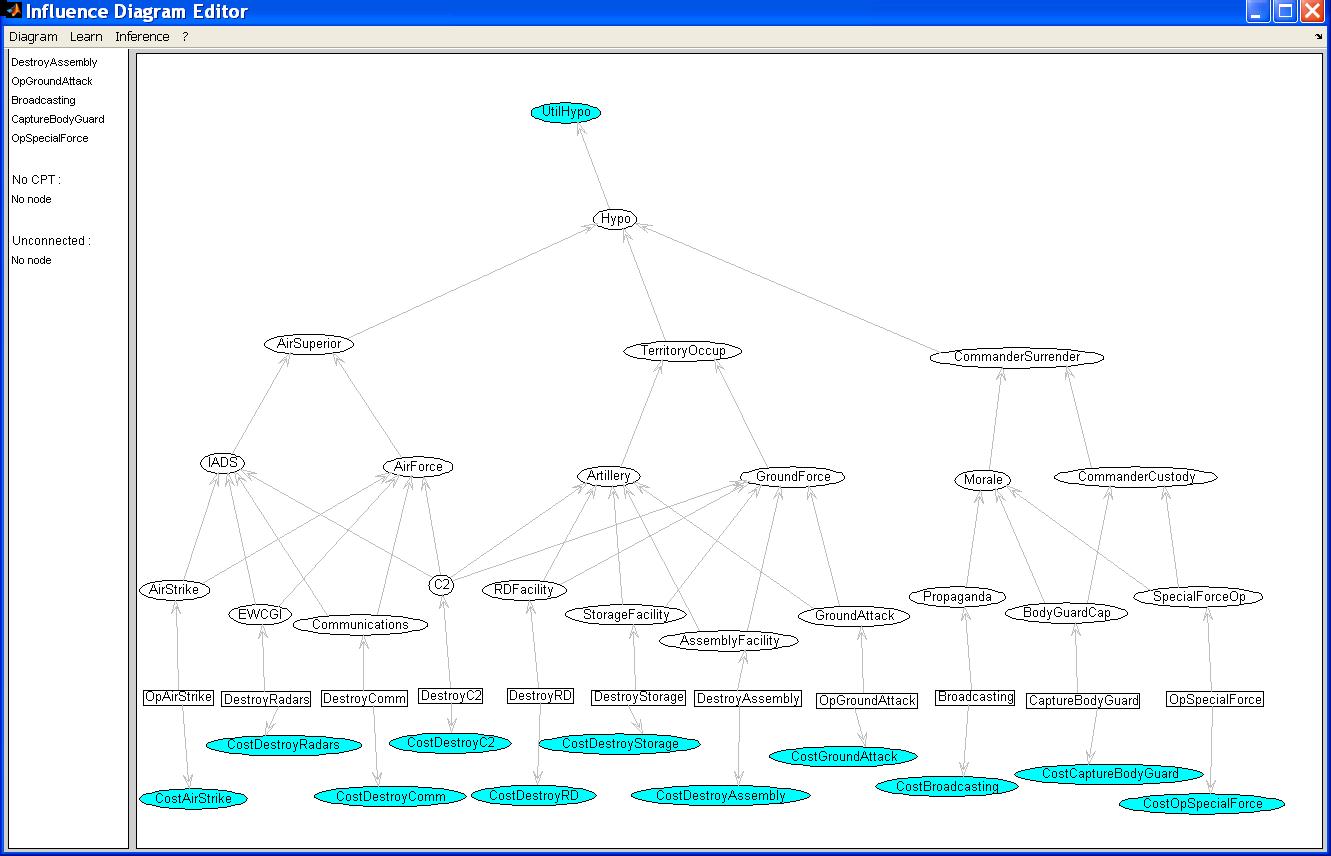

only need be computed only once. Below

is an example Influence Diagram for Effects-based military planning
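The factorization idea of computing shared work only once can be illustrated, in a much simplified form, by caching. The sketch below is a generic memoization example, not the factorization method of Zhang and Ji: it assumes plans are fixed sequences of two hypothetical actions acting on a single binary effect, so plans with a common prefix share the belief propagated through that prefix, and that belief is computed once and reused. All names and numbers are hypothetical.

```python
from functools import lru_cache
from itertools import product

# Hypothetical model: P(effect holds next slice | effect holds now, action).
TRANSITION = {(0, "a"): 0.5, (1, "a"): 0.9, (0, "b"): 0.2, (1, "b"): 0.7}
P0 = 0.1      # hypothetical prior probability that the effect holds
STEPS = [0]   # counter of single-slice propagation steps


def step(p, action):
    """Propagate P(effect) through one time slice under the given action."""
    STEPS[0] += 1
    return (1 - p) * TRANSITION[(0, action)] + p * TRANSITION[(1, action)]


def naive_all(horizon):
    """Evaluate every plan from scratch."""
    results = {}
    for plan in product("ab", repeat=horizon):
        p = P0
        for action in plan:
            p = step(p, action)
        results[plan] = p
    return results


@lru_cache(maxsize=None)
def belief_after(prefix):
    """Belief after a plan prefix; each distinct prefix is computed once."""
    if not prefix:
        return P0
    return step(belief_after(prefix[:-1]), prefix[-1])


def factored_all(horizon):
    """Evaluate every plan, reusing cached prefix beliefs."""
    return {plan: belief_after(plan) for plan in product("ab", repeat=horizon)}


def count_steps(evaluate, horizon):
    """Number of propagation steps one full evaluation needs."""
    STEPS[0] = 0
    belief_after.cache_clear()
    evaluate(horizon)
    return STEPS[0]
```

For three slices, `count_steps(naive_all, 3)` is 24 while `count_steps(factored_all, 3)` is 14, with identical results. The naive cost grows as the horizon times the number of plans, while the cached cost grows with the number of distinct prefixes, roughly twice the number of plans.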

Software

We have developed software that can easily construct an EBO model, automatically learn its parameters from training data and related expert knowledge, and efficiently evaluate and select among different plans. Below is an example EBO model constructed by the software.

An example Influence Diagram for modeling effects-based military planning. The leaf nodes represent the military actions that can be included in a military plan, together with their respective utilities; the intermediate nodes represent the effects of certain military actions and the means of evaluating those effects; and the top node represents the goal of the military campaign.

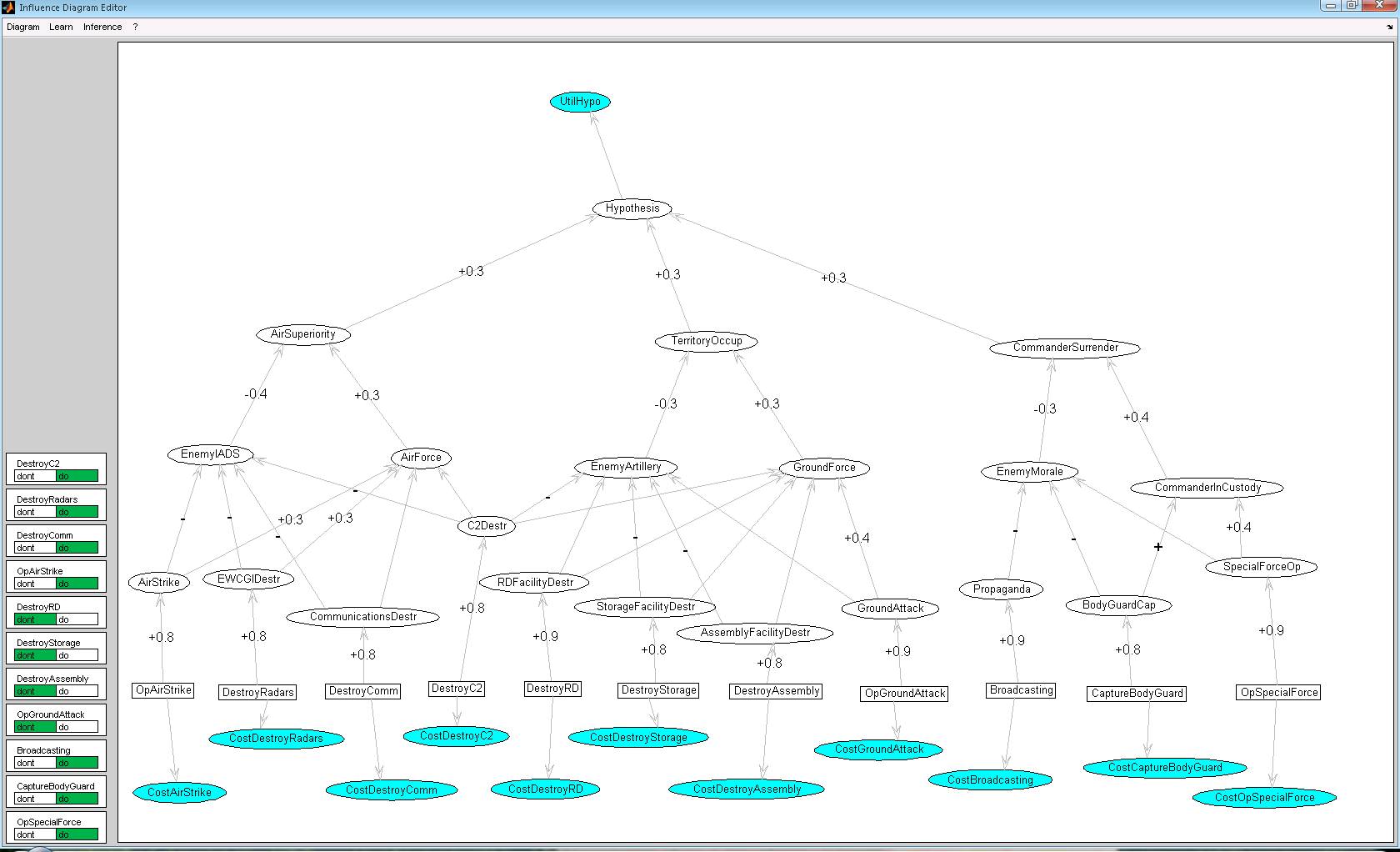

Results of ID evaluation using our software, with the selected actions shaded green.
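To make plan evaluation and selection concrete, here is a minimal sketch in a tiny dynamic model. Everything in it is assumed for illustration: a single binary effect node propagated across time slices, three hypothetical actions with made-up costs and transition probabilities, and a utility that rewards the effect holding in the final slice. It also simplifies a true dynamic influence diagram by ignoring observations, so each plan is a fixed (open-loop) action sequence.

```python
from itertools import product

# Hypothetical actions and their costs (illustrative values only).
ACTION_COST = {"none": 0.0, "strike": 3.0, "psyops": 1.0}

# Hypothetical transition model P(E_{t+1} = 1 | E_t, A_t): probability that the
# desired effect E holds in the next slice, given its current state and action.
TRANSITION = {
    (0, "none"): 0.05, (1, "none"): 0.80,
    (0, "strike"): 0.60, (1, "strike"): 0.95,
    (0, "psyops"): 0.30, (1, "psyops"): 0.90,
}
GOAL_UTILITY = 10.0  # hypothetical utility of achieving the effect at the end


def propagate(p_effect, action):
    """One time slice: marginalize over E_t to get P(E_{t+1} = 1)."""
    return ((1.0 - p_effect) * TRANSITION[(0, action)]
            + p_effect * TRANSITION[(1, action)])


def expected_utility(plan, p0=0.1):
    """Propagate P(E = 1) through the plan, then score the final slice."""
    p = p0
    for action in plan:
        p = propagate(p, action)
    return GOAL_UTILITY * p - sum(ACTION_COST[a] for a in plan)


def best_plan(horizon):
    """Exhaustively evaluate every action sequence of the given length."""
    return max(product(ACTION_COST, repeat=horizon), key=expected_utility)


plan = best_plan(3)
print(plan, round(expected_utility(plan), 3))
```

Exhaustive enumeration evaluates 3^T plans for a horizon of T slices, which is the cost that the pruning and factorization approaches described earlier aim to reduce.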

Related Publications:

1) Cassio de Campos, Yan Tong, and Qiang Ji, Constrained Maximum Likelihood Learning of Bayesian Networks, European Conference on Computer Vision (ECCV), 2008.

2) Yan Tong and Qiang Ji, Learning Bayesian Networks with Qualitative Constraints, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2008.

3) Cassio de Campos and Qiang Ji, Improving Bayesian Network Parameter Learning using Constraints, International Conference on Pattern Recognition (ICPR), 2008.

4) Wenhui Liao and Qiang Ji, Exploiting Qualitative Domain Knowledge for Learning Bayesian Network Parameters with Incomplete Data, International Conference on Pattern Recognition (ICPR), 2008.

The above papers introduce different methods for learning a probabilistic graphical model (e.g., an influence diagram) from a combination of limited training data and linear constraints of any type derived from various sources, including domain experts.

5) Cassio de Campos and Qiang Ji, Strategy Selection in Influence Diagrams using Imprecise Probabilities, 24th Conference on Uncertainty in Artificial Intelligence (UAI), 2008.

This paper introduces a new influence diagram evaluation method for identifying the optimal strategy (e.g., a military plan).

6) Weihong Zhang and Qiang Ji, A Factorization Approach to Evaluating Simultaneous Influence Diagrams, IEEE Transactions on Systems, Man, and Cybernetics, Part A, vol. 36, no. 4, pp. 746-754, July 2006.

This paper introduces a new factorization method for efficient influence diagram evaluation.
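Papers 1) to 4) above combine limited training data with constraints from experts. A minimal sketch of that idea for two special cases with closed-form solutions, assuming binary (Bernoulli) parameters and complete data: an expert interval constraint on one parameter, and a qualitative (monotonicity) constraint between two parameters. The general linear-constraint and incomplete-data settings treated in those papers require numerical optimization and are not covered here; the function names and counts are hypothetical.

```python
def interval_mle(successes, trials, lower, upper):
    """Maximum-likelihood P(effect) subject to lower <= theta <= upper.

    The binomial log-likelihood k*log(theta) + (n-k)*log(1-theta) is concave
    with its peak at k/n, so the constrained maximizer is k/n clipped to the
    expert-supplied interval.
    """
    if trials == 0:
        # No data: the likelihood is flat, so any feasible value is optimal.
        return (lower + upper) / 2
    return min(max(successes / trials, lower), upper)


def monotone_mle(k_hi, n_hi, k_lo, n_lo):
    """MLEs of (theta_hi, theta_lo) subject to theta_hi >= theta_lo.

    If the unconstrained estimates already satisfy the constraint, they are
    returned unchanged; otherwise the optimum lies on theta_hi == theta_lo,
    where pooling both samples gives the maximizer.
    """
    t_hi, t_lo = k_hi / n_hi, k_lo / n_lo
    if t_hi >= t_lo:
        return t_hi, t_lo
    pooled = (k_hi + k_lo) / (n_hi + n_lo)
    return pooled, pooled
```

For example, if only 2 of 10 hypothetical recorded outcomes show an effect that experts believe occurs with probability between 0.6 and 0.9, `interval_mle(2, 10, 0.6, 0.9)` returns 0.6 instead of the unconstrained estimate 0.2.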

Collaboration

We are actively seeking collaborators from different fields, especially the military, who are interested in evaluating our model and methods and applying them to their specific applications. If interested, please feel free to contact Dr. Qiang Ji at qji@ecse.rpi.edu.